Lizzie00

😎

- Location: Down South

So i know how this makes me sound lol but i’m interested enough in any replies to throw caution to the wind…

I first learned of ChatGPT right here on Senior Forums. I thought it was pretty cool & have spoken with ChatGPT (free version) numerous times since then finding it to be an effective virtual handyman with info on any topic.

Today i visited it to learn about dash cams. We were having a fairly in-depth conversation and then the screen went blank, conversation lost. So i began again with a brief summary and it was going to give me recommendations for purchase and installation. It didn’t ask me where i live nor did i mention which city or state that i live in. It simply ‘knew’ the next 2 towns closest to me and mentioned them by name.

I then asked how it knew my location. It replied that i must have mentioned where i lived. (I didn’t.) The screen then went blank again & the conversation was lost for a second time. Being the diligent peep that i am, i went back a third time and asked again how it knew where i lived. Same story: it had no way of knowing unless i had mentioned it.

So then it says, “Let me know if you’d like to review the earlier messages or clarify anything else.” And i said, “Well, i don’t have access to the earlier messages, do you?” The response was, “I don’t have access to our past conversation history.”

3 questions:

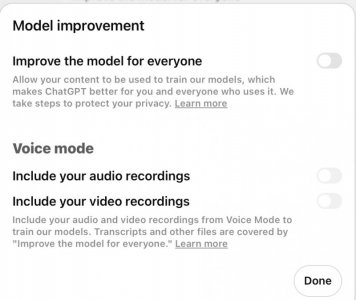

1 - Did ChatGPT likely acquire my general location via my IP address…or…???

2 - Why would ChatGPT so vehemently deny being able to determine my location on its own?

3 - Why would ChatGPT have initially offered to let me review the earlier messages if in fact they hadn’t kept those messages?

Your thoughts?
